What is Artificial Intelligence?

A recent Financial Conduct Authority (FCA) discussion paper, DP22/4: Artificial Intelligence, offered the following definition of Artificial Intelligence (AI):

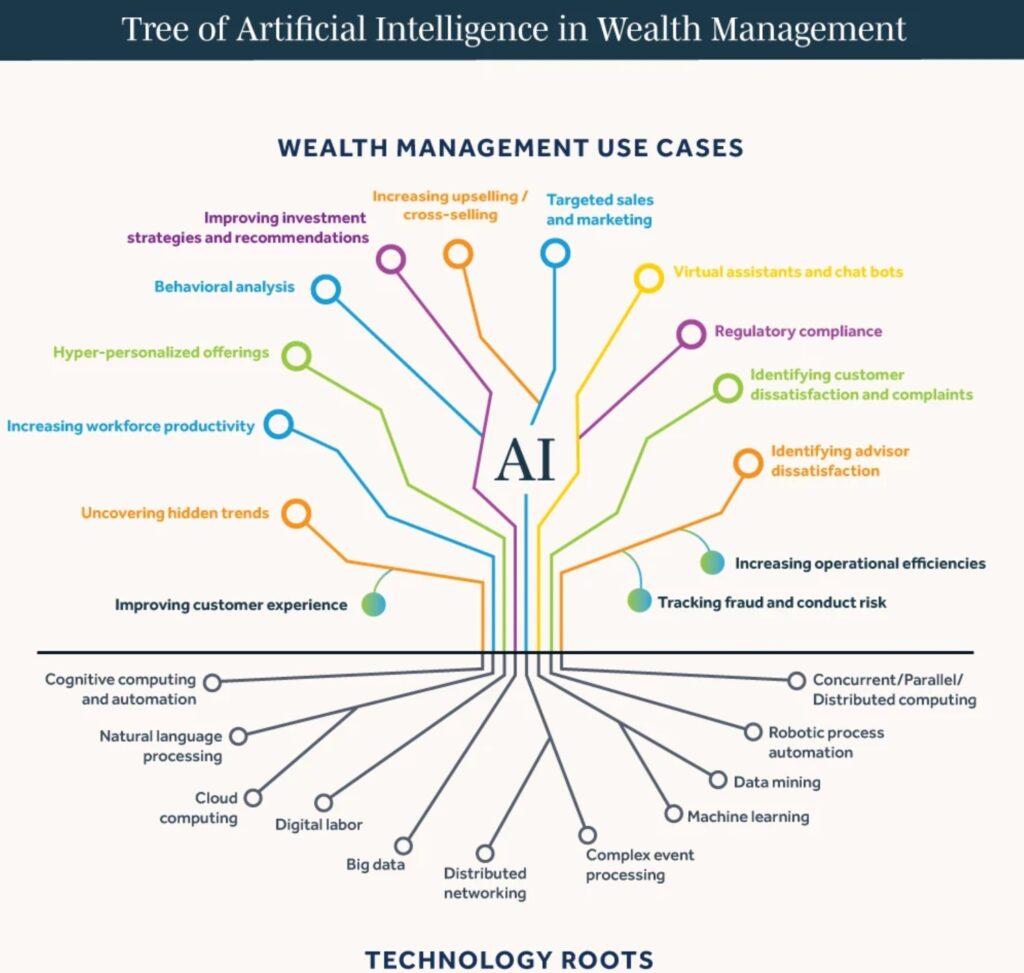

‘It is generally accepted that AI is the simulation of human intelligence by machines, including the use of computer systems, which have the ability to perform tasks that demonstrate learning, decision-making, problem solving, and other tasks which previously required human intelligence. Machine learning is a sub-branch of AI.

AI, a branch of computer science, is complex and evolving in terms of its precise definition. It is broadly seen as part of a spectrum of computational and mathematical methodologies that include innovative data analytics and data modelling techniques.’

- What do firms need to consider?

Many PIMFA members are already using AI systems and tools in their everyday operations, and it is likely that the adoption of AI in financial services will increase rapidly over the next few years.

PIMFA firms use AI tools for a range of purposes. For example, AI tools can analyse large volumes of data quickly and accurately, which allows employees to spend more time working with and for their clients.

There are concerns that, as AI becomes more advanced, it could introduce new risks. For example, an AI system could develop biases in its decision-making, leading firms to make bad decisions and resulting in poor outcomes for their clients.

This is why it is essential that firms deploying AI systems have a suitably robust control framework around their AI components, so that they can keep a careful check on what those components are doing.

As with any innovation, AI has the potential to make fundamental and far-reaching improvements to how firms serve their clients. However, we must ensure it is monitored and checked regularly to manage the risks and maximise the benefits.

- What are regulators doing?

A number of government departments have asked regulators such as the Financial Conduct Authority (FCA), the Bank of England (BoE), the Information Commissioner's Office (ICO) and the Competition and Markets Authority (CMA) to publish an update on their strategic approach to AI and the steps they are taking in line with the government's AI regulation White Paper. The Secretary of State has asked for this update by 30 April 2024.

On 13 March 2024, the European Parliament approved the EU Artificial Intelligence Act (EU AI Act). The Act sets out a comprehensive legal framework governing AI, establishing EU-wide rules on data quality, transparency, human oversight and accountability. It contains some challenging requirements, has broad extraterritorial effect and carries potentially very large fines for non-compliance.

Latest news

FCA – Feedback Statement (FS25/5) AI Live Testing

The FCA has published a Feedback Statement on the responses it received to its April 2025 Engagement Paper proposing AI Live Testing.

The statement notes that:

- Respondents welcomed the proposal for AI Live Testing, viewing it as a constructive and timely step toward increasing transparency, trust and accountability in the use of AI systems.

- Respondents highlighted real-world insights, overcoming proof-of-concept (PoC) paralysis, creating trust, addressing first-mover reluctance and gaining regulatory comfort as key benefits and opportunities.

Next steps

- Applications for the first cohort of AI Live Testing close on 15 September 2025, and work with firms in the first cohort will start in October 2025.

- Applications for the second cohort will open before the end of 2025.

- An evaluation report on AI Live Testing will be published after 12 months.

For further details, read the statement here.

FCA – AI adoption – regulatory approach

The FCA has published advice on its regulatory approach to the adoption of AI in UK financial markets.

The regulator highlights that its approach is principles-based and outcomes-focused, rather than built on detailed, prescriptive rules, so that firms have the flexibility to adapt to technological change and market developments. The FCA also confirmed that it has no plans to introduce extra regulations for AI. Instead, it intends to rely on existing frameworks to mitigate many of the risks associated with AI, and it notes the relevance of the Consumer Duty and the Senior Managers and Certification Regime (SM&CR) to the safe use of AI.

Read more details here.

An AI for an AI – Artificial Intelligence as a sword and a shield in the battle against fraud

Read this article by Alan Baker & Hannah Bohm-Duchen, Farrer & Co, in our latest Journal.

Bank for International Settlements Innovation Hub – Project Noor (AI models for financial supervision)

The Bank for International Settlements (BIS) Innovation Hub has launched Project Noor, which aims to equip financial supervisors with independent, practical tools to assess AI models used by banks and other financial institutions.

Using explainable AI methods and risk analytics, the objective is to deliver a prototype through which supervisors can verify model transparency, assess fairness and test robustness.

The prototype should help to facilitate greater transparency, consistent protection and responsible innovation.

More information can be found here.

Redefining Risk: Women, AI, and the democratisation of investing

Read this article by Zoe Morton, RSM, in our latest Journal.

PIMFA