What is Artificial Intelligence?

A recent Financial Conduct Authority (FCA) discussion paper, DP22/4: Artificial Intelligence, offered the following definition of Artificial Intelligence (AI):

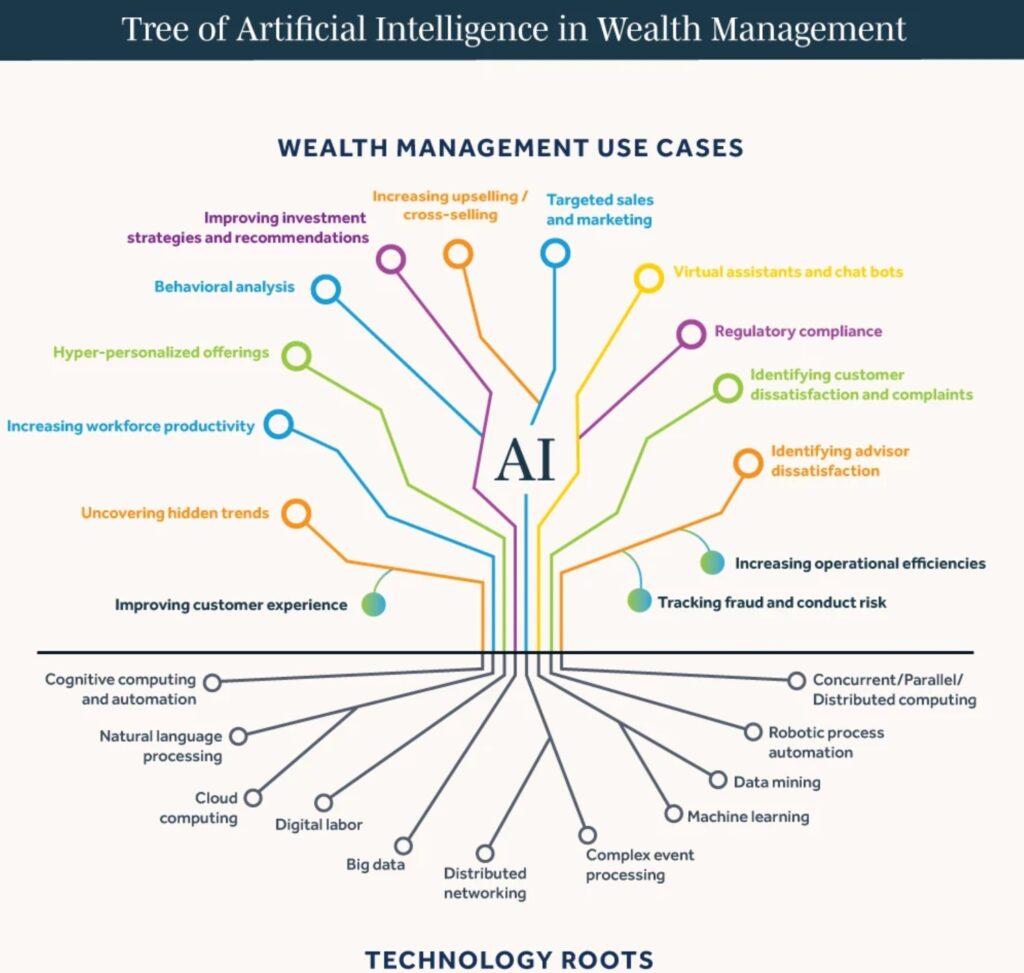

‘It is generally accepted that AI is the simulation of human intelligence by machines, including the use of computer systems, which have the ability to perform tasks that demonstrate learning, decision-making, problem solving, and other tasks which previously required human intelligence. Machine learning is a sub-branch of AI.

AI, a branch of computer science, is complex and evolving in terms of its precise definition. It is broadly seen as part of a spectrum of computational and mathematical methodologies that include innovative data analytics and data modelling techniques.’

- What do firms need to consider?

Many PIMFA members are already using AI systems and tools in their everyday operations, and it is likely that the adoption of AI in financial services will increase rapidly over the next few years.

PIMFA firms already use AI tools for several purposes. For example, these tools can analyse large volumes of data quickly, easily, and accurately, which frees employees to spend more time working with and for their clients.

There are concerns that as AI becomes more advanced, it could introduce new risks. For example, a system could develop biases in its decision-making, leading firms to make bad decisions and resulting in poor outcomes for their clients.

This is why it is essential that firms deploying AI systems have a suitably robust control framework around their AI components to keep a careful check on what they are doing.

As with any innovation, AI has the potential to make fundamental and far-reaching improvements in how firms serve their clients. However, we must ensure it is monitored continually and checked regularly to manage the risks and maximise the benefits.

- What are regulators doing?

A number of government departments are asking regulators such as the Financial Conduct Authority (FCA), Bank of England (BoE), Information Commissioner's Office (ICO) and Competition and Markets Authority (CMA) to publish an update on their strategic approach to AI and the steps they are taking in line with the White Paper. The Secretary of State is asking for this update by 30 April 2024.

On 13 March 2024, the European Parliament approved the EU Artificial Intelligence Act. The EU AI Act sets out a comprehensive legal framework governing AI, establishing EU-wide rules on data quality, transparency, human oversight and accountability. It features some challenging requirements, has broad extraterritorial effect and carries potentially huge fines for non-compliance.

Latest news

Leading Lights Forum Report 2025/26

AI: Evolution, Revolution or Devastation? Read the new Leading Lights Report

Treasury Select Committee AI in Financial Services Guide

The Treasury Select Committee (TSC) has published a report on AI regulation in financial services. This follows the inquiry (February 2025) which examined the opportunities and risks posed by AI.

The TSC:

- notes that AI and wider technological developments could bring considerable benefits to consumers

- encourages firms and the FCA to work together to enable the UK to capitalise on AI opportunities

- states that, given the risks presented by AI, a wait-and-see approach (by HM Treasury [HMT] and the regulators) to AI in financial services can expose consumers and the financial system to potentially serious harm

The report also sets out recommendations:

- The FCA should publish comprehensive, practical guidance for firms on:

– the application of existing consumer protection rules to their use of AI

– accountability and the level of assurance expected from senior managers under the SMCR for harm caused through the use of AI

- The regulators should conduct AI-specific stress-testing to boost business readiness for any future AI-driven market shock.

- HMT should designate the major AI and cloud providers as critical third parties for the purposes of the Critical Third Parties Regime.

Read the full report here.

FCA Review of AI: Mills Review

The FCA has opened a review examining how advanced AI might affect consumers, retail financial markets, and regulatory bodies. Led by Sheldon Mills, the review invites input through an Engagement Paper focused on four connected areas:

- The potential future development of AI

- How these changes might influence markets and firms

- The consequences for consumers

- How financial regulators may need to adapt

The FCA is seeking feedback by 24 February 2026. Responses will feed into recommendations for the FCA Board in summer 2026, followed by a public report.

If you have any comments or would like to discuss this review further, please reach out to Maria Fritzsche.

The AI Advantage: How to Revolutionise Business Growth with Reverification

Read an article from PIMFA Journal #32 by Alexander Blayney, Global Partnerships & Enterprise Sales at Id-pal, on how, with identity fraud increasingly prevalent, AI applied to identity reverification can help revolutionise business growth.

FCA AI Live Testing: Applications Open for Second Round

The FCA has opened applications for the second cohort of its AI Live Testing service. Participating firms receive tailored guidance from FCA specialists and technical partner Advai to help them develop, assess and implement safe, well‑governed AI systems.

The service supports firms in addressing key areas such as AI governance, risk management and ongoing monitoring, ensuring technologies are introduced responsibly and with consumer and market integrity in mind.

AI Live Testing runs alongside the FCA’s Supercharged Sandbox, which supports firms still in the exploratory and experimentation stages of AI adoption.

To find out more, click here.

PIMFA
