What is Artificial Intelligence?

A recent Financial Conduct Authority (FCA) discussion paper, DP22/4: Artificial Intelligence, offered the following definition of Artificial Intelligence (AI):

‘It is generally accepted that AI is the simulation of human intelligence by machines, including the use of computer systems, which have the ability to perform tasks that demonstrate learning, decision-making, problem solving, and other tasks which previously required human intelligence. Machine learning is a sub-branch of AI.

AI, a branch of computer science, is complex and evolving in terms of its precise definition. It is broadly seen as part of a spectrum of computational and mathematical methodologies that include innovative data analytics and data modelling techniques.’

- What do firms need to consider?

Many PIMFA members are already using AI systems and tools in their everyday operations, and it is likely that the adoption of AI in financial services will increase rapidly over the next few years.

PIMFA firms use AI tools for several purposes. For example, these tools can analyse large volumes of data quickly, easily and accurately, which enables employees to spend more time working with and for their clients.

There are concerns that, as AI becomes more advanced, it could introduce new risks. For example, a system could develop biases in its decision-making, leading firms to make bad decisions and resulting in poor outcomes for their clients.

This is why it is essential that firms deploying AI systems have a suitably robust control framework around their AI components to keep a careful check on how those components behave.

As with any innovation, AI has the potential to make fundamental and far-reaching improvements in how firms serve their clients. However, we must ensure that it is monitored and checked continually to manage the risks and maximise the benefits.

- What are regulators doing?

A number of government departments are asking regulators such as the Financial Conduct Authority (FCA), Bank of England (BoE), Information Commissioner’s Office (ICO) and Competition and Markets Authority (CMA) to publish an update on their strategic approach to AI and the steps they are taking in line with the government’s AI regulation White Paper. The Secretary of State has asked for this update by 30 April 2024.

On 13 March 2024, the European Parliament approved the EU Artificial Intelligence Act. The EU AI Act sets out a comprehensive legal framework governing AI, establishing EU-wide rules on data quality, transparency, human oversight and accountability. It contains some challenging requirements, has broad extraterritorial effect and carries potentially large fines for non-compliance.

Latest news

FCA Speech – Harnessing AI and technology

The FCA has published a speech by its Chief Data, Information and Intelligence Officer, Jessica Rusu.

The speech focused on harnessing AI and technology to deliver the FCA’s 2025 strategic priorities and noted initiatives such as the Supercharged Sandbox (a collaboration between the FCA and Nvidia).

The Supercharged Sandbox will begin in October 2025, and the complementary AI Live Testing offering opens for applications in the week commencing 7 July 2025.

On authorisations and supervision, the FCA highlighted:

- Testing large language models to analyse text and deliver efficiencies

- Using predictive AI to assist supervisors

- Using conversational AI bots to redirect consumer queries to relevant agencies such as the Financial Ombudsman Service (FOS).

Read the speech here.

FCA: Supercharging AI Innovation: FCA Partners with Nvidia

At London Tech Week 2025, Jessica Rusu, the FCA’s Chief Data, Information and Intelligence Officer, unveiled major steps the regulator is taking to support safe, responsible AI in financial services, including a new collaboration with Nvidia.

The FCA AI Lab, launched in January, features four key zones:

- AI Sprint – collaborative events shaping outcome-based AI regulation

- AI Input Zone – identifying transformative AI use cases

- AI Spotlight – a live repository of real-world AI applications

- Supercharged Sandbox – an upgraded space for early-stage AI experimentation. Applications are now open for firms to test their proof-of-concept AI solutions in the Supercharged Sandbox from October 2025.

Rusu’s speech also mentioned the new partnership with Nvidia, which will bring advanced AI tools and GPU computing power to the Supercharged Sandbox, accelerating development for start-ups and innovators. AI Live Testing will allow firms to work with the FCA on testing AI models in real time, building a shared understanding of responsible AI use.

The FCA is showing that regulation can drive innovation, positioning the UK as a global leader in FinTech and AI.

Read the full speech here.

PIMFA response to the FCA Engagement Paper – Proposal for AI Live Testing

PIMFA has submitted a response to the FCA’s engagement paper on its proposed AI Live Testing regime. We welcome the regulator’s proactive and open approach to AI regulation. The proposal reflects a forward-thinking response to a rapidly evolving area.

However, we continue to have concerns regarding the deployment of AI models more broadly. In our response, we call for greater clarity around key issues such as liability and accountability, vendor confidence, model bias, hallucinations, and broader AI-related risks. We also emphasise that innovation in financial services does not necessarily depend on AI.

Our full response can be found here.

New FCA blog on tech, trust and teamwork

Nikhil Rathi, FCA Chief Executive, and John Edwards, UK Information Commissioner, have published a blog looking at how they can help firms use AI responsibly while protecting consumers and fostering innovation. The post identifies uncertainty and lack of familiarity as the main blockers to innovation.

The blog calls on firms and trade bodies to keep communication channels with the regulators open, not only when there is a problem but earlier in the innovation journey.

Read the full blog here.

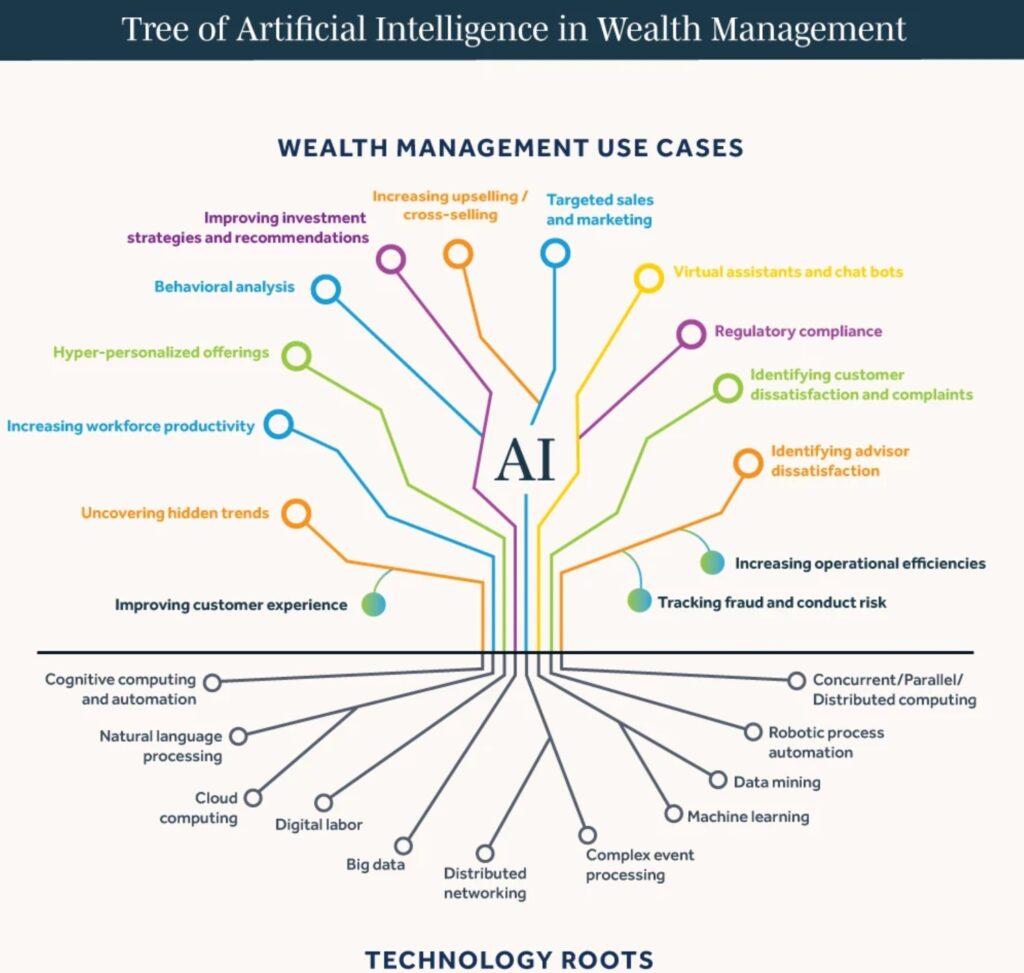

The potential for AI in UK wealth management

Read this article by Suman Rao, Managing Director at Avaloq, in our recent Journal.

New PIMFA Blog: AI and the Environment: A Double-Edged Sword for the Investment Industry

Whilst a recent survey reveals that 62% of wealth management firms believe AI will significantly reshape their operations, the infrastructure powering AI, such as data centres, poses a substantial environmental challenge, generating vast amounts of e-waste and consuming significant energy.

In this blog, PIMFA’s Senior Policy Adviser, Maria Fritzsche, delves deeper into the problems and potential solutions.

Read the blog here.


PIMFA
